An Adoption Model for Architectural Decision Making and Capturing


Content Outline

- Motivation

- Model Dimensions

- Level Overview

- Level 1: Undefined and Unconscious

- Level 2: Ad-hoc and Unstructured

- Level 3: Encouraged and Supported

- Level 4: Systematic, Selective and Diligent

- Level 5: Optimized and Rigorous

- Application Hints and Assessment Tool

- Future Directions

- Related Posts

- Summary and Outlook

Architectural Decision (AD) making and capturing are essential tasks for enterprise architects and solution architects; Architectural Decision Records (ADRs) have become increasingly popular. However, principles and practices for AD management and ADR documentation vary. This post proposes five levels of AD adoption, inspired by maturity models in other areas.

Motivation

Over the years, the focus of architecture work has extended from structure to decision rationale, as explained in “The Software Architect’s Role in the Digital Age” (2016). For instance, searching for “decision” in “Open Agile Architecture”, a 2020 standard from The Open Group, returns 164 hits. The standard refers to one of the popular ADR formats, proposed by Michael Nygard in 2011. Note that there are many more templates, and the concept of AD capturing is about 25 years old now; see “Architectural Decisions — The Making Of” for a partial history.

When working with clients from different business sectors, we observed various AD management habits and practices. This post derives five levels of adoption from these observations. Such grouping helps with managing complexity, which is one purpose of an adoption (or maturity) model.

The specific future use cases for our proposed Architectural Decision Adoption Model include:

- Learn about proven practices: how thoroughly are ADs made and documented elsewhere?

- Reflect and assess whether one’s current approach(es) are adequate for a given context.

- Identify action points en route to improving current practices.

Note that naming matters and is hard… here, we decided for the term “adoption model” and neglected “maturity model” because more is not always better here.1

Let’s start with the model dimensions that we identified.

Model Dimensions

According to our analysis, the AD making and capturing approaches in practice differ in several ways:

- Usage scenario. Why are Architectural Decision Records (ADRs) created, what is their function? Are they created once (after-the-fact) or do they steer the design work and evolve iteratively?

- Scope and scale. Do ADRs remain local artifacts or is there a sharing culture? When and how are ADRs shared: within a single team, across teams, organizational unit, and/or enterprise?

- Structure and location. This aspect deals with documentation. Is a template used to create ADRs? If so, is it minimal or elaborate? How is it established and maintained? Where are the resulting ADR logs located? Are ADRs searchable/findable?

- Process and engagement, including timing. Are architectural significance criteria established? Has a Definition of Done (DoD) been established? Are decision making steps defined? Can Last Responsible Moment (LRM) or Big Design Upfront (BDUF) mentalities be seen, or a Most Responsible Moment (MRM) mindset?2

- Tool support and automation. Are off-the-shelf, general-purpose tools or custom-made, AD-specific tools used to create ADRs? Are these tools integrated with other tools for architecture design and project planning? How manual or automated is the capturing, recording, and reuse of the decisions?

- Review culture. When and how are ADs quality-assured? For instance, are reviews optional or mandatory? Is a formal sign-off and/or external approval required before executing on an AD? Does a date to revisit an ADR have to be defined (or an ADR validity period)?3

- Learning (education, training). How are architects taught how to apply the chosen AD management practices and tools? Is this self-organized or supported by the organization? What kind of training material is compiled and shared? Are official classes available or even mandatory to attend?

We call these AD management characteristics model dimensions or adoption level criteria. The seven dimensions and their combinations define the five levels of our adoption model.
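The "review culture" dimension asks whether a date to revisit an ADR has to be defined. As a minimal sketch of what that could look like in a tool-supported ADR log, the following Python snippet lists entries whose revisit date has passed. The `AdrEntry` class, its field names and the sample entries are hypothetical and only serve as illustration; they are not taken from any existing ADR tool.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class AdrEntry:
    """Hypothetical, minimal ADR log entry (field names are assumptions)."""
    title: str
    decided_on: date
    revisit_by: Optional[date] = None  # None means no revisit date was defined


def due_for_revisit(log: List[AdrEntry], today: date) -> List[AdrEntry]:
    """Return the entries whose revisit date is today or lies in the past."""
    return [e for e in log if e.revisit_by is not None and e.revisit_by <= today]


# Made-up sample log, for illustration only
log = [
    AdrEntry("Use PostgreSQL for order data", date(2023, 1, 10), date(2024, 1, 10)),
    AdrEntry("Adopt REST for the public API", date(2023, 3, 5)),
    AdrEntry("Host on managed Kubernetes", date(2023, 6, 1), date(2025, 6, 1)),
]

overdue = due_for_revisit(log, today=date(2024, 2, 1))
print([e.title for e in overdue])
```

Entries without a revisit date are skipped rather than flagged; whether a missing date should count as a finding depends on how strict the review culture is meant to be.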

Level Overview

We adopted the five “classic” maturity levels for knowledge management and added AD-specific semantics to them:4

- Undefined and unconscious aka nonexistent/chaotic

- Ad-hoc and unstructured aka initial/reactive/ad-hoc

- Encouraged and supported aka defined/functioning/aware

- Systematic, selective and diligent aka managed/proactive/integrated

- Optimized and rigorous aka effective/automated/ubiquitous/self-managed

As you can tell, we have been unable to decide on a single adjective per level so far. 🤔 Suggestions are welcome!

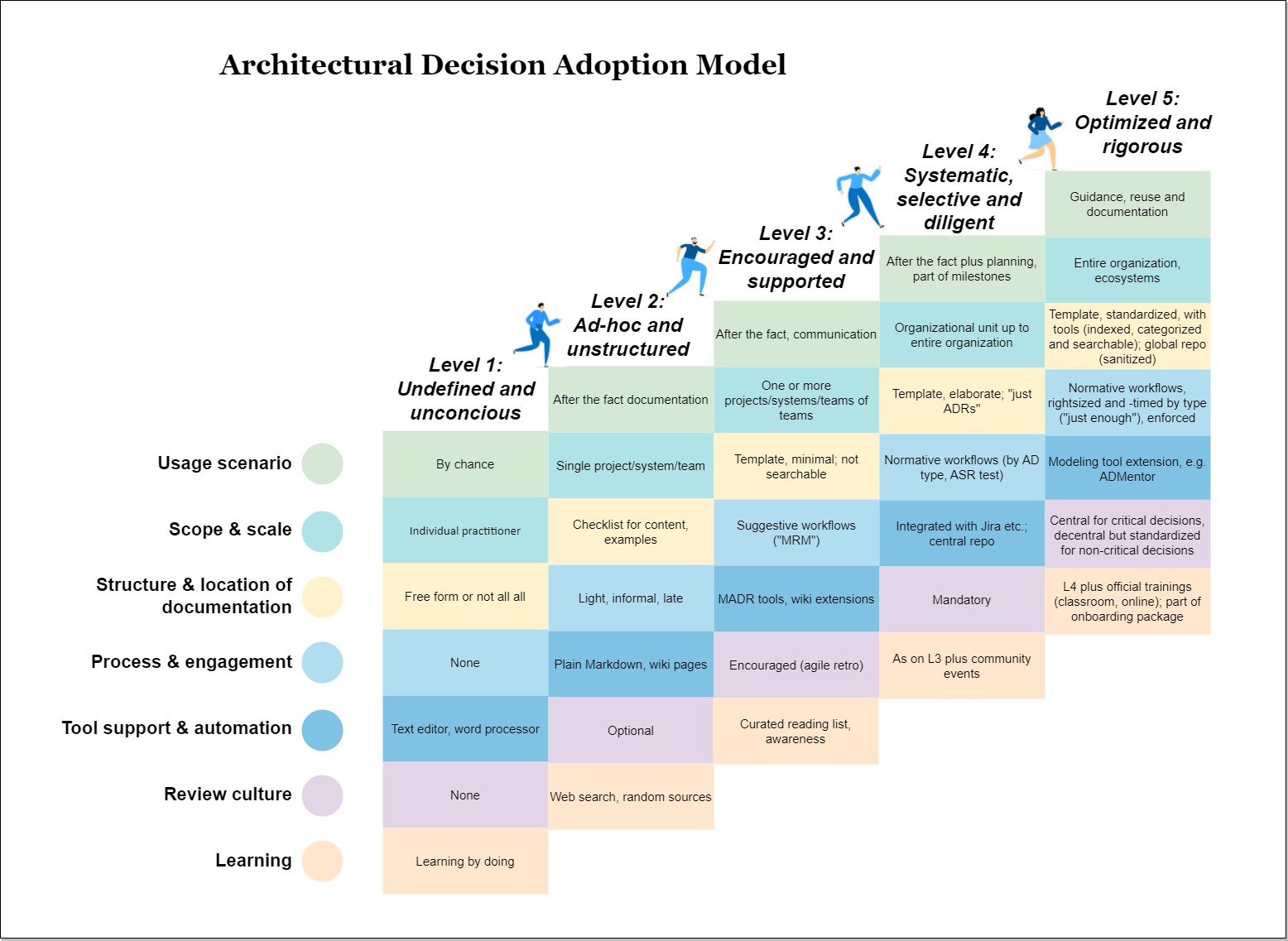

The following figure visualizes the levels and how they do w.r.t. the adoption criteria and dimensions:

Level 1: Undefined and Unconscious

All architectural decisions are made intuitively and unconsciously, embedded in development activities. The architecture is fully emergent. No ADRs are documented in any form; if one is documented at all, this is due to individual initiative and happens in free form, for instance using a text editor. There is no review process. These few ADRs are used only by chance and only by the individual architect (after-the-fact). Learning by doing is the only approach to education and training.

Level 2: Ad-hoc and Unstructured

Some teams recognize the need for intentional architectural decision making, and a few isolated initiatives have been started; ADRs are created and used by single projects or teams. However, the decision making and capturing process is still ad-hoc and unstructured. Documentation is created after-the-fact; reviewing is optional. The process is not unified across teams. As far as tools are concerned, there might be checklists for documentation, and plain Markdown or wiki pages might be used. Learning is self-organized and basic.

Level 3: Encouraged and Supported

One or more projects/teams use ADRs, still in after-the-fact documentation mode and/or as a means of communication. In many teams, architectural decisions are made systematically, informed by and based on Architecturally Significant Concerns (ASCs), including requirements and constraints, desired non-functional qualities in particular. This applies especially to hard-to-reverse and critical decisions. However, the principles of Just-in-Time Architecture (JITA) and Just Enough Architecture (JEA) are not consistently applied, and decision making and documentation are still centralized. Reviews are encouraged, but still initiated in a self-organized way. Minimal templates might be used for documentation, possibly supported by light tools such as the (M)ADR tools or homegrown wiki extensions. A collection of recommended readings about ADs and ADRs exists, and awareness sessions take place.

Level 4: Systematic, Selective and Diligent

In most teams, ADs are made systematically, depending on their type: informed and driven by ASCs for hard-to-reverse and critical decisions, subconsciously (i.e., embedded in development activities) for easy-to-undo-and-revise decisions. Decision identification is an explicit activity that helps with project planning. JITA and JEA are actively and consciously applied in the organization. However, there still is rather bureaucratic central governance of decision making and documentation; teams do not operate autonomously. Elaborate templates are used to document ADRs in a central repository, which is integrated with issue trackers such as Jira or similar tools. ADR reviews are mandatory, and ADR-related topics are regularly covered at community events.

Level 5: Optimized and Rigorous

Across the whole organization, hard-to-reverse and critical decisions are identified proactively and made systematically in an informed fashion starting from the ASCs. All teams and organizational units start from a curated set of recurring, common ASCs. Not-hard-to-reverse decisions are made subconsciously (i.e., embedded in other design and development activities). JITA and JEA are actively and consciously lived in the culture. The decision making and documenting process is mostly autonomous, and there is not much central governance except for reviewing critical decisions that affect several organizational units. A standardized, often elaborate template is used to capture the decisions in a global repository, which is indexed, categorized and searchable. This repository is connected with architectural modeling tools. ADRs are shared and reused across the whole organization, but also with other actors in the same ecosystem (with sensitive and confidential information eliminated). They may be actively managed and take a guiding role as a design evolves. The ADR review process is documented and enforced, taking the AD type into account; AD boards or communities of practice exist to review critical decisions. AD making and documenting are part of official training programs and onboarding packages.

Application Hints and Assessment Tool

At this point, you might be wondering whether and how you can leverage our adoption model with its levels, dimensions and the pointers to existing practices that appear in the descriptions.

The first step is the hardest usually… why don’t you just give it a try and answer a “why” question about an existing design informally? Next, you may want to apply one of the existing templates to the decision you just recorded. If you are unhappy with the template, tweak it. Once you are happy with the result, have your ADR reviewed and share it. Once your community has provided feedback and agrees on the initial ADR, make it available to others as a learning example. You just went through the five adoption levels without noticing!
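To make the "apply one of the existing templates" step more tangible, here is a minimal Python sketch that renders a decision in the section structure commonly associated with the Nygard format mentioned earlier (title, status, context, decision, consequences). The `Adr` class, the `to_markdown` helper and the sample decision are hypothetical; real templates such as MADR or Y-statements have their own structure.

```python
from dataclasses import dataclass


@dataclass
class Adr:
    """Hypothetical container for one ADR in a Nygard-style structure."""
    number: int
    title: str
    status: str
    context: str
    decision: str
    consequences: str


def to_markdown(adr: Adr) -> str:
    """Render the ADR as a Markdown document, one heading per section."""
    sections = [
        f"# {adr.number}. {adr.title}",
        f"## Status\n\n{adr.status}",
        f"## Context\n\n{adr.context}",
        f"## Decision\n\n{adr.decision}",
        f"## Consequences\n\n{adr.consequences}",
    ]
    return "\n\n".join(sections) + "\n"


# Made-up example decision, for illustration only
adr = Adr(
    number=1,
    title="Keep ADRs next to the source code",
    status="Accepted",
    context="Decisions captured in email threads are hard to find later.",
    decision="We store one Markdown file per decision in the repository.",
    consequences="ADRs are versioned with the code; non-developers need repository access.",
)

text = to_markdown(adr)
print(text)
```

Starting with such a small structure keeps the first step cheap; fields can be added once the team finds the minimal version insufficient.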

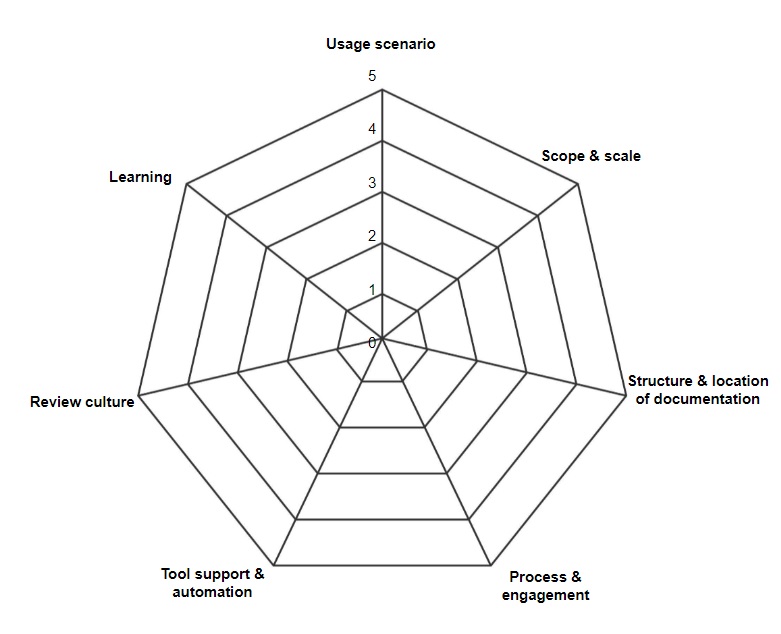

Another way to get started, a little more systematic, is a self assessment. A spider web chart is well suited to visualize the current and desired adoption levels in a team or an organization:

You may want to fill out the spider web for your sphere of influence and then decide on a level. The questions about the “Model Dimensions” above may serve as a checklist while doing so.
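The data behind such a spider web can also be kept in a few lines of code. The following Python sketch records as-is and to-be levels (1 to 5) per dimension and ranks the dimensions by gap. The scores are invented, and using the median as an overall level is our assumption for this sketch; the model itself does not prescribe an aggregation rule.

```python
from statistics import median
from typing import Dict, List, Tuple

# The seven model dimensions from this post, used as dictionary keys
DIMENSIONS = [
    "usage scenario",
    "scope and scale",
    "structure and location",
    "process and engagement",
    "tool support and automation",
    "review culture",
    "learning",
]


def dimension_gaps(as_is: Dict[str, int], to_be: Dict[str, int]) -> List[Tuple[str, int]]:
    """Return (dimension, to-be minus as-is) pairs, largest gap first."""
    for scores in (as_is, to_be):
        for dim in DIMENSIONS:  # a missing dimension raises KeyError
            if not 1 <= scores[dim] <= 5:
                raise ValueError(f"{dim}: level must be between 1 and 5")
    pairs = [(dim, to_be[dim] - as_is[dim]) for dim in DIMENSIONS]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# Invented self-assessment of a single team, for illustration only
as_is = dict(zip(DIMENSIONS, [2, 2, 3, 2, 1, 2, 1]))
to_be = dict(zip(DIMENSIONS, [3, 3, 3, 3, 3, 3, 2]))

gaps = dimension_gaps(as_is, to_be)
overall_as_is = median(as_is.values())  # assumption: median as summary level

print("Overall as-is level (median):", overall_as_is)
for dim, gap in gaps:
    print(f"{dim}: gap {gap}")
```

The dimensions with the largest gap are candidates for the first action points; a gap of zero means the current practice already matches the desired level.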

Finally, it is worth noting that not all teams and organizations have to go up to Level 5 in our humble opinion; sometimes, less is more. For instance, a small startup merely consisting of a single and small agile development team might be happy to operate on Levels 2 to 3, maybe move to Level 4 when it becomes successful and starts to grow. The 1000+ person architect community of a globally operating firm in an industry that is subject to audit and regulation might decide to strive for Level 5.

Future Directions

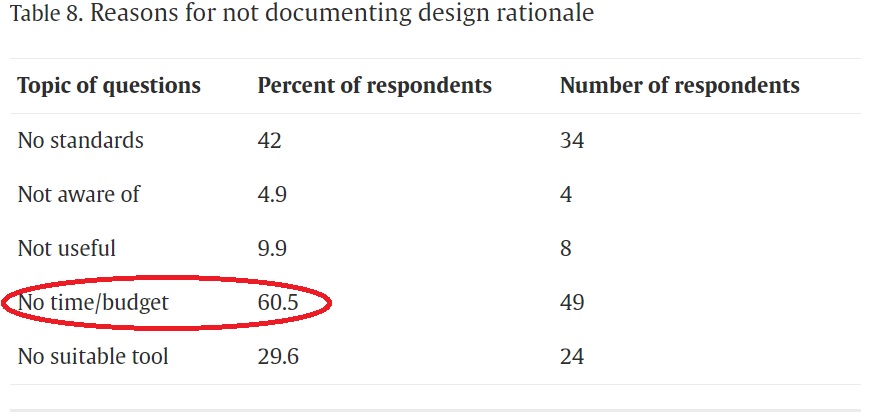

One of the barriers of capturing (and thereby reusing) ADRs has been reported by Antony Tang and his co-authors back in “A survey of architecture design rationale” in 2006 — time and effort is needed of development teams, enterprise architects and CIOs:

How to eliminate this barrier? Automation, information retrieval, natural language processing and machine learning come to mind.

To the best of our knowledge, no mature tool has emerged yet that would retrieve ADRs automatically and with high precision from the vast body of unstructured documents (such as emails, meeting minutes, strategic documents etc.) and then store them, in spite of some efforts and research prototypes in this direction. However, the software architecture research community reports that there is potential; see for instance the master's thesis “Using machine learning and natural language processing to automatically extract information from software documentation”. Such efforts could become more attractive now that ChatGPT and similar AI/ML offerings have become available and are being experimented with.

Therefore, an additional Level 6 “Assisted and automated and ambient” can be envisioned for our model: Easy to use, low-threshold tools are used across the organization to capture intentionally made decisions in a template, possibly processing natural language forms or voice recordings. Such tools would convert the plain text into structured information and then commit it to a central repository. Other tools would extract decisions automatically from informal documents (meeting minutes etc.) or unveil unconsciously made decisions in the source code. Leveraging the central repository, a central design administrator can periodically review ADRs and structure them differently if needed.5 The central repository can then be searched and continuously improved jointly. The book chapter “Decisions Required vs. Decisions Made. Connecting Enterprise Architects and Solution Architects via Guidance Models” proposes additional capabilities.
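To give a rough idea of the "extract decisions from informal documents" capability, the following Python sketch flags candidate decision sentences in meeting minutes. It is a deliberately naive keyword heuristic, not the NLP or ML approach envisioned above; the cue phrases, the function name and the sample minutes are assumptions made for this illustration.

```python
import re
from typing import List

# Assumed cue phrases; a real tool would need a far richer model
CUE_PHRASES = ("we decided", "we chose", "we will use", "we agreed")


def candidate_decisions(text: str) -> List[str]:
    """Return the sentences that contain at least one cue phrase."""
    # Split after sentence-ending punctuation followed by whitespace
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if any(cue in s.lower() for cue in CUE_PHRASES)]


# Invented meeting minutes, for illustration only
minutes = (
    "Attendees discussed the release plan. "
    "We decided to use PostgreSQL instead of MongoDB for order data. "
    "Lunch was ordered at noon. "
    "After some debate, we agreed to expose the API via REST."
)

found = candidate_decisions(minutes)
for sentence in found:
    print("Candidate AD:", sentence)
```

Such a heuristic will miss decisions phrased differently and will flag non-architectural ones, which is one reason why a human review step remains part of the envisioned Level 6.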

Related Posts

When starting to practice AD capturing and sharing and going up the adoption level staircase, the following posts in this blog might be useful:

- How to create ADRs — and how not to, new in 2023.

- Architectural Significance Test and Some Core Decisions provides a checklist with five plus two significance indicators (2020).

- Architectural Decisions — The Making Of, introducing the rather light Y-statement template (2020).

- The Markdown ADR (MADR) Template Explained and Distilled

- A Definition of Done for Architectural Decision Making, proposing five “done” criteria (2020).

- How to review ADRs — and how not to, new in 2023.

“A Simple Framework for Architectural Decisions” at InfoQ reports that ADRs are becoming increasingly popular, for instance at Amazon Web Services and Google Cloud Platform.

Summary and Outlook

ADRs are relatively easy to get started with, compared to design structure-centric notations and formats. This is one of the reasons they became popular, which also explains why practices for AD making, capturing and sharing vary quite a bit. To be able to benefit from AD recording and sharing, architecting skills and experience are required, which include communication skills and experience.

Adoption levels and dimensions help to find your way and pick the practices that have the best cost-benefit ratio in your context. We proposed seven adoption model dimensions in this post: usage scenario, scope and scale, structure and location, process and engagement, tool support and automation, review culture, learning and education. The five levels of our AD Adoption Model that we derived from observing current AD practices with the help of these dimensions are:

1. Undefined and unconscious

2. Ad-hoc and unstructured

3. Encouraged and supported

4. Systematic, selective and diligent

5. Optimized and rigorous

We also envisioned an extra Level 6 leveraging next-generation, semi-intelligent ADR automation tools and assistants (but did not go up to 11 😉).

To get started, how about reflecting on how you and your current project do with respect to the seven model dimensions? And then filling out the spider diagram, for the as-is and the to-be situation?

We hope you find our model and this post useful. Is anything missing? Let us know!

– Olaf (Zimmermann) and Mohsen (Anvaari)

October 10, 2024: There is a Medium version of this post now.

Acknowledgements

Mirko Stocker and Stefan Kapferer reviewed an earlier version of this post and/or shared their experience regarding ADRs with us. Justus Bogner and Christian Ringler reviewed the June 21 version. Several global architect communities at clients, partners and employers provided input and inspiration as well.

Notes

1. Is this naming decision worth capturing with the help of a template or is plain text good enough here?

2. In other words, can a “(too) much architecture approach” (i.e., proactive/intentional decision making in all cases) be observed or is a “just enough” approach to architecture design practiced (i.e., proactive/intentional decision making for hard-to-reverse decisions, reactive decision making for decisions that are easy to reverse)?

3. Note the difference between AD and ADR (see definitions in this post).

4. For a general model and level names, refer to the conference paper “Development and Application of a General Knowledge Management Maturity Model”, PACIS 2006 Proceedings, AIS Electronic Library (AISeL).

5. Is this a new enterprise architect responsibility?